Summary:

The idea of placing AI data centers in space is driven by energy limits, scaling pressure, and strategic control over future computing infrastructure. As artificial intelligence systems demand unprecedented power and reliability, orbital environments offer near-continuous solar energy, water-free radiative cooling, and independence from terrestrial constraints. This concept reflects a broader push to rethink where and how large-scale AI computing should exist as demand presses against Earth-bound limits.

When Earth Becomes the Bottleneck

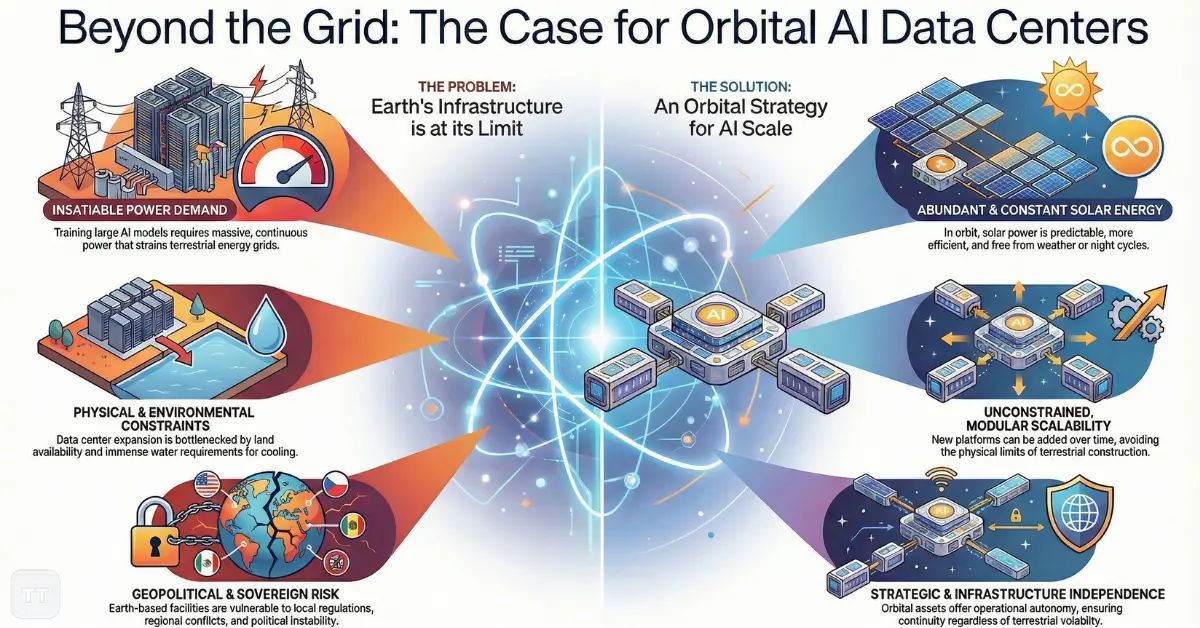

Artificial intelligence development is increasingly constrained not by algorithms, but by infrastructure. Training large models now requires massive clusters of specialized hardware, enormous power input, and stable cooling environments. On Earth, these requirements collide with real-world limits: grid capacity, land availability, environmental regulations, geopolitical friction, and rising energy costs.

Most discussions about AI scalability assume that data centers will continue expanding across deserts, rural regions, or underwater facilities. What often goes unexamined is whether Earth itself becomes the limiting factor. The interest in moving parts of AI computing infrastructure into orbit reflects a more radical conclusion: future AI growth may require stepping outside the planet altogether.

The motivation is not science fiction aesthetics. It is a calculated response to energy physics, long-term scale economics, and strategic autonomy.

The Energy Equation Driving AI Beyond Earth

AI workloads differ fundamentally from traditional computing. Training and inference at scale require continuous, predictable power delivery at levels that challenge existing grids. Even regions rich in renewable energy face intermittency, transmission losses, and political opposition to massive new facilities.

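In orbit, the energy profile changes substantially. Solar exposure is free from weather disruptions and varies little with the seasons. In suitable orbits, such as dawn-dusk sun-synchronous orbits, it is also almost free from night cycles, although a platform in a typical low Earth orbit still passes through Earth's shadow on every revolution. Above the atmosphere, sunlight arrives at roughly 1,361 W/m², compared with a peak of about 1,000 W/m² at the surface, so the absence of atmospheric filtering alone provides a significant gain in usable power. Combined with near-continuous exposure, this lets large orbital platforms harvest far more energy per square meter of panel than terrestrial installations can reliably match.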
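
A rough back-of-the-envelope comparison makes the energy argument concrete. The Python sketch below multiplies irradiance by the fraction of the day a panel receives it and by panel efficiency. The solar constant (about 1,361 W/m²) and the 1,000 W/m² terrestrial rating are standard reference values. The panel efficiency, the orbital sunlit fraction, and the ground capacity factor are illustrative assumptions, not measurements, and real results depend heavily on the chosen orbit and site.

```python
SOLAR_CONSTANT_W_M2 = 1361.0  # mean solar irradiance above the atmosphere at 1 AU
GROUND_PEAK_W_M2 = 1000.0     # standard peak irradiance used to rate terrestrial panels


def daily_energy_kwh_per_m2(irradiance_w_m2, sunlit_fraction, panel_efficiency):
    """Electrical energy produced per square meter of panel over 24 hours."""
    return irradiance_w_m2 * sunlit_fraction * panel_efficiency * 24 / 1000


# Illustrative assumptions only, not measured data:
EFFICIENCY = 0.22              # same panel technology in both settings (hypothetical)
ORBIT_SUNLIT_FRACTION = 0.99   # e.g. a dawn-dusk sun-synchronous orbit (assumed)
GROUND_CAPACITY_FACTOR = 0.25  # fraction of peak a sunny site averages over night,
                               # weather, and sun angle (assumed)

orbit = daily_energy_kwh_per_m2(SOLAR_CONSTANT_W_M2, ORBIT_SUNLIT_FRACTION, EFFICIENCY)
ground = daily_energy_kwh_per_m2(GROUND_PEAK_W_M2, GROUND_CAPACITY_FACTOR, EFFICIENCY)

print(f"orbit:  {orbit:.2f} kWh/m^2/day")
print(f"ground: {ground:.2f} kWh/m^2/day")
print(f"ratio:  {orbit / ground:.1f}x")
```

Most of the gap comes from the sunlit fraction rather than the higher irradiance, which is why orbit selection matters as much as leaving the atmosphere.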

More importantly, space-based generation scales roughly linearly: capacity grows in proportion to array area, and adding it does not require negotiating land rights, upgrading regional grids, or competing with residential and industrial demand. The constraint becomes engineering and launch economics rather than local politics or geography.

This reframes energy not as a bottleneck to be managed, but as a controllable variable.

Why AI Workloads Are Uniquely Suited to Orbit

Not all computing belongs in space. Latency-sensitive applications, consumer-facing services, and real-time control systems remain Earth-dependent. AI model training, however, is different.

Training large models prioritizes throughput over immediacy. Jobs run for days or weeks, consume enormous power, and tolerate higher latency as long as data transfer remains reliable. Inference workloads for batch processing, scientific modeling, or large-scale simulation share similar characteristics.

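Orbital environments also change thermal management. Heat dissipation, one of the most expensive aspects of data center operation, works differently in vacuum: with no air to carry heat away, all waste heat must be rejected by radiators, which need large surface areas. In exchange, a radiator facing deep space sheds heat without water cooling, chillers, or energy-intensive airflow systems, removing some of the largest operating costs and resource demands of terrestrial facilities.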
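
The cost of radiative cooling shows up as radiator area. In vacuum, waste heat leaves only by radiation, so the required area follows from the Stefan-Boltzmann law: area equals heat load divided by (emissivity × σ × (T_radiator^4 - T_sink^4)) per radiating side. The minimal Python sketch below assumes an emissivity of 0.9 and a deep-space sink near 3 K, and it ignores absorbed sunlight and infrared from Earth, all of which a real design must account for. `radiator_area_m2` is a hypothetical helper written for illustration.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W m^-2 K^-4


def radiator_area_m2(heat_w, radiator_temp_k, emissivity=0.9, sink_temp_k=3.0, sides=1):
    """Radiator area needed to reject heat_w to deep space.

    Assumes an ideal flat radiator; ignores absorbed sunlight and Earth infrared.
    """
    flux_per_side = emissivity * STEFAN_BOLTZMANN * (radiator_temp_k**4 - sink_temp_k**4)
    return heat_w / (flux_per_side * sides)


# Area to reject 1 MW of waste heat at two radiator temperatures (one radiating side).
for temp_k in (300, 350):
    print(f"{temp_k} K: {radiator_area_m2(1e6, temp_k):,.0f} m^2 per MW")
```

Under these assumptions, rejecting 1 MW from a radiator at 300 K takes roughly 2,400 m² of single-sided surface. Running the radiator hotter shrinks it quickly, because emitted power grows with the fourth power of temperature.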

The result is an environment naturally aligned with the physics of AI computation rather than one constantly fighting against them.

Scale as the Primary Strategic Driver

The defining challenge of advanced AI is scale. Each new generation of frontier models has required many times more training compute than the last, not incrementally more. This scaling pressure creates structural stress across supply chains, power grids, and regulatory frameworks.

Orbital data centers introduce a different scaling paradigm. Capacity can expand modularly, with additional platforms added over time rather than building increasingly massive terrestrial complexes. This aligns with how space infrastructure already evolves: incremental, distributed, and engineered for redundancy.

The strategic implication is significant. Control over scalable compute becomes less dependent on national infrastructure and more tied to orbital assets. This shifts AI capability from being constrained by local policy to being governed by space-access capability and orbital logistics.

Control, Sovereignty, and Infrastructure Independence

One of the least discussed motivations is control. Large AI systems increasingly shape economic power, military planning, and information ecosystems. Hosting the infrastructure that runs them inside national borders exposes it to regulation, taxation, political pressure, and, in some regions, instability.

Placing AI computing infrastructure in orbit introduces a form of infrastructural sovereignty. While not legally ungoverned, space-based assets operate under a different set of treaties and enforcement realities. This reduces exposure to localized disruptions such as energy shortages, land disputes, or regional conflict.

For technology leaders focused on long-term autonomy, this control layer may matter as much as energy efficiency. It ensures continuity of capability regardless of terrestrial volatility.

The Role of Launch Economics and Reusability

A decade ago, the cost of placing heavy computing infrastructure into orbit would have been prohibitive. That assumption no longer holds. Reusable launch systems, higher payload efficiency, and declining per-kilogram costs have fundamentally altered the equation.

As launch frequency increases, orbital maintenance and hardware replacement become viable. Data center components can be upgraded rather than abandoned. This is critical for AI workloads, where hardware obsolescence happens quickly.

The strategy relies on a virtuous cycle: lower launch costs enable orbital infrastructure, which in turn justifies higher launch cadence, further reducing costs. This feedback loop mirrors how cloud computing evolved on Earth, but compressed into a space-access framework.

Orbital Placement and Architectural Tradeoffs

Not all orbits are equally suitable for AI computing infrastructure. Low Earth orbit offers lower latency and easier maintenance but faces congestion and debris risks. Higher orbits provide stability and solar exposure but increase communication delay.
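
The communication delay grows with altitude because signals travel at the speed of light. A lower bound on round-trip time is twice the altitude divided by c, for a satellite directly overhead with no processing, queuing, or relay hops. In the sketch below, 550 km and 8,000 km are example altitudes chosen to represent low and medium Earth orbit; 35,786 km is the geostationary altitude.

```python
SPEED_OF_LIGHT_M_S = 299_792_458


def min_round_trip_ms(altitude_km):
    """Lower bound on ground-to-orbit round-trip time in milliseconds.

    Assumes the satellite is directly overhead and ignores processing,
    queuing, and any relay hops.
    """
    return 2 * altitude_km * 1000 / SPEED_OF_LIGHT_M_S * 1000


for name, altitude_km in [("LEO (550 km)", 550),
                          ("MEO (8,000 km)", 8_000),
                          ("GEO (35,786 km)", 35_786)]:
    print(f"{name}: {min_round_trip_ms(altitude_km):.1f} ms")
```

Even the best case for geostationary orbit is about 239 ms round trip, versus a few milliseconds in low Earth orbit, which is why latency-tolerant training suits higher orbits far better than interactive services.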

These tradeoffs suggest a layered architecture. Training clusters may operate in higher, more stable orbits where energy harvesting is optimized, while supporting systems remain closer to Earth for data relay and coordination.

This distributed model also improves resilience. Failure in one orbital segment does not collapse the entire system, aligning with fault-tolerant design principles already common in cloud architecture.
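
The resilience claim can be quantified with a simple k-of-n model: if each of n platforms is independently available with probability p, the chance that at least k are up is a binomial tail sum. Independence is a strong assumption in orbit, since a debris event or solar storm can affect several platforms at once, and the per-platform availability of 0.95 below is hypothetical.

```python
from math import comb


def k_of_n_availability(n, k, p):
    """Probability that at least k of n independent platforms are up,
    each with availability p (binomial tail sum)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))


p = 0.95  # hypothetical per-platform availability
print(f"single monolithic platform fully up: {p:.4f}")
print(f"at least 8 of 10 platforms up:       {k_of_n_availability(10, 8, p):.4f}")
```

With these numbers, ten smaller platforms keep at least 80% of capacity online roughly 99% of the time, whereas a single monolithic platform with the same per-unit availability is completely down 5% of the time.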

Data Movement: The Overlooked Constraint

Energy and compute often dominate the conversation, but data movement is equally critical. Training AI models requires transferring massive datasets between Earth and orbit. Bandwidth limits, transmission costs, and security risks complicate this step.

The practical response is selective migration. Not all data needs to move. Preprocessed datasets, synthetic data generation, and model weights can be staged efficiently. Over time, orbital systems may host their own data generation pipelines, reducing dependence on constant Earth uplink.
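
A quick calculation shows why selective migration matters. Transfer time is data size in bits divided by the effective link rate, where the effective rate accounts for the fraction of time a ground station is actually in contact. The 10 Gbit/s link, 50% contact fraction, and 1 PB dataset below are hypothetical round numbers; the weight size assumes one trillion parameters stored at 2 bytes each (for example, bfloat16).

```python
def transfer_time_s(size_bytes, link_bps, availability):
    """Seconds to move size_bytes over a link of link_bps bits per second
    that is usable only a fraction `availability` of the time."""
    return size_bytes * 8 / (link_bps * availability)


LINK_BPS = 10e9      # hypothetical 10 Gbit/s ground-to-orbit link
AVAILABILITY = 0.5   # hypothetical fraction of time a ground station is in contact

raw_dataset_bytes = 1e15       # 1 PB of raw training data (illustrative)
weights_bytes = 1e12 * 2       # 1 trillion parameters at 2 bytes each

days = transfer_time_s(raw_dataset_bytes, LINK_BPS, AVAILABILITY) / 86_400
hours = transfer_time_s(weights_bytes, LINK_BPS, AVAILABILITY) / 3_600
print(f"raw dataset:   {days:.1f} days")
print(f"model weights: {hours:.1f} hours")
```

Under these assumptions the raw dataset takes about 18.5 days to uplink while the model weights move in under an hour, which is why staging weights and preprocessed data is far cheaper than streaming raw data.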

This gradual decoupling mirrors how cloud platforms reduced reliance on on-premise infrastructure over time rather than all at once.

Environmental Implications Beyond the Headlines

Space-based computing is often framed as environmentally friendly due to reduced land and water use. That framing is incomplete. Launch emissions, orbital debris risk, and end-of-life disposal all carry environmental costs.

However, when compared to the long-term footprint of terrestrial mega data centers, the balance becomes more nuanced. Eliminating freshwater consumption, reducing grid strain, and avoiding land disruption may offset launch-related impacts over decades of operation.

The environmental case depends less on optics and more on lifecycle analysis. If orbital systems operate continuously for years with minimal resupply, their relative impact improves significantly.
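
The lifecycle argument reduces to a break-even calculation: the extra upfront emissions of building and launching an orbital facility are repaid only if its annual emissions, including resupply launches, are lower than those of a comparable terrestrial facility. The function below is a minimal sketch of that arithmetic; `breakeven_years` is a hypothetical helper, and all tonnage figures in the example are placeholders chosen to show the shape of the tradeoff, not estimates for any real system.

```python
import math


def breakeven_years(extra_upfront_t, annual_orbit_t, annual_ground_t):
    """Years of operation before an orbital facility repays its extra upfront
    emissions (tonnes CO2e) through lower annual emissions than a terrestrial one.

    Returns math.inf if the orbital facility never emits less per year.
    """
    annual_saving_t = annual_ground_t - annual_orbit_t
    if annual_saving_t <= 0:
        return math.inf
    return extra_upfront_t / annual_saving_t


# Placeholder inputs only, to show how resupply cadence shifts break-even.
for label, annual_orbit in [("light resupply", 2_000), ("heavy resupply", 15_000)]:
    years = breakeven_years(extra_upfront_t=50_000,
                            annual_orbit_t=annual_orbit,
                            annual_ground_t=20_000)
    print(f"{label}: {years:.1f} years to break even")
```

With the placeholder inputs, light resupply breaks even in under three years while heavy resupply stretches it to ten; if resupply emissions exceed the terrestrial baseline, the facility never breaks even.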

Security and Reliability in an Orbital Context

Physical security changes dramatically in space. Orbital data centers are immune to many terrestrial threats but exposed to others, including space weather, debris collision, and potential anti-satellite actions.

Mitigation strategies already exist: redundancy, shielding, orbital maneuvering, and distributed architectures. Importantly, AI training workloads tolerate interruption well: jobs can be checkpointed, migrated, or restarted without catastrophic loss.
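
Checkpointing is what makes that tolerance practical. The toy Python loop below saves its state every `ckpt_every` steps and resumes from the latest checkpoint on restart. It writes each checkpoint to a temporary file and atomically swaps it in, so a fault mid-write cannot corrupt the last good checkpoint. `train`, `SimulatedFault`, and the JSON state format are hypothetical stand-ins, not the API of any real training framework; production systems checkpoint model and optimizer tensors, typically to replicated storage.

```python
import json
import os
import tempfile


class SimulatedFault(Exception):
    """Stands in for a radiation upset, debris strike, or lost node (hypothetical)."""


def train(total_steps, ckpt_path, ckpt_every, fail_at=None):
    """Toy training loop: resume from a checkpoint if one exists and
    save state every ckpt_every steps."""
    state = {"step": 0, "loss": 10.0}
    if os.path.exists(ckpt_path):
        with open(ckpt_path) as f:
            state = json.load(f)
    while state["step"] < total_steps:
        if fail_at is not None and state["step"] == fail_at:
            raise SimulatedFault(f"fault at step {state['step']}")
        state["step"] += 1
        state["loss"] *= 0.99  # placeholder for a real optimizer update
        if state["step"] % ckpt_every == 0:
            tmp_path = ckpt_path + ".tmp"
            with open(tmp_path, "w") as f:
                json.dump(state, f)
            os.replace(tmp_path, ckpt_path)  # atomic swap: no half-written checkpoint
    return state


ckpt = os.path.join(tempfile.mkdtemp(), "ckpt.json")
try:
    train(1000, ckpt, ckpt_every=100, fail_at=437)
except SimulatedFault as err:
    print("interrupted:", err)
final = train(1000, ckpt, ckpt_every=100)  # resumes from the step-400 checkpoint
print("finished at step", final["step"])
```

After a fault at step 437, the restart redoes only the 37 steps since the last checkpoint, so the work at risk from any single failure is bounded by the checkpoint interval.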

From a reliability perspective, the absence of weather, earthquakes, and grid failures may actually increase long-term uptime compared to Earth-based facilities.

Adoption Signals and Industry Momentum

While the concept remains early, signals of interest are visible. Space agencies, cloud providers, and defense contractors are exploring orbital computing prototypes. Advances in in-space manufacturing and assembly further support feasibility.

What differentiates this strategy is not novelty, but alignment with existing trends: rising AI energy demand, decentralization of infrastructure, and commercialization of space operations. Adoption is likely to begin with specialized workloads before expanding to broader AI ecosystems.

At that stage, orbital computing stops being experimental and becomes strategic.

Common Misconceptions That Distort the Debate

One misconception is that space-based AI computing aims to replace terrestrial data centers. It does not. The more realistic model is augmentation. Orbit handles the workloads Earth struggles to sustain efficiently.

Another misunderstanding is that latency makes orbital AI unusable. For many AI tasks, latency is secondary to throughput. The assumption that all compute must be near users oversimplifies how AI systems actually operate.

Correcting these misconceptions clarifies why the strategy is not a gimmick, but a targeted response to specific constraints.

Who This Strategy Actually Benefits

This approach primarily benefits organizations operating at extreme scale: frontier AI labs, national research programs, and platforms training models measured in trillions of parameters. Smaller companies gain indirectly through reduced pressure on terrestrial infrastructure and more diversified compute markets.

It is less relevant for startups running lightweight inference or consumer applications. For them, traditional cloud remains more practical. The value emerges at the intersection of energy intensity, long training cycles, and long-term strategic planning.

Frequently Asked Questions

Why consider AI data centers in space instead of expanding on Earth?

Because energy availability, cooling, and land constraints increasingly limit Earth-based expansion. Space offers near-continuous solar power in suitable orbits and fewer physical bottlenecks for extreme-scale workloads.

Does orbital AI computing eliminate environmental concerns?

No. It shifts them. While water and land use decrease, launch emissions and debris management become important tradeoffs that must be addressed.

Is latency a deal-breaker for AI in orbit?

For real-time applications, yes. For training and batch inference, latency is far less critical than throughput and reliability.

Are space-based data centers secure?

They face different risks than terrestrial facilities. Redundancy, distribution, and fault tolerance mitigate many of these challenges.

When could this become operational at scale?

Initial deployments are likely within this decade, with gradual expansion as launch economics and in-space assembly mature.

Conclusion: A Strategy Rooted in Physics, Not Hype

The push to place AI computing infrastructure in orbit reflects a sober assessment of limits, not a fascination with spectacle. Energy density, scalability, and control increasingly shape what is possible in artificial intelligence. When those factors collide with planetary constraints, the logical response is to change the environment.

Whether this strategy becomes dominant remains uncertain. What is clear is that the future of AI infrastructure will not be confined to familiar data center footprints. As compute demands accelerate, the boundary between Earth and orbit may become less a barrier and more a design choice.