Mark Zuckerberg’s Meta AI smart glasses demo failure may seem embarrassing for the tech giant, but understanding what went wrong offers valuable lessons about live tech demonstrations and the challenges of cutting-edge AI technology.

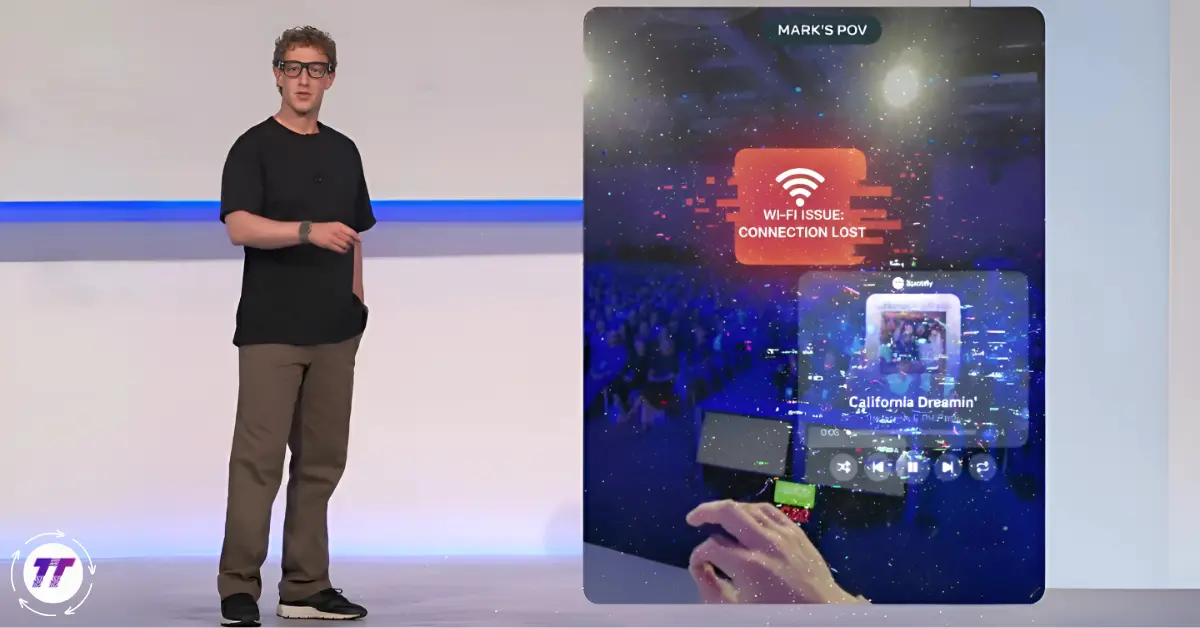

The highly anticipated Meta Connect 2025 event took an unexpected turn when Mark Zuckerberg’s live demonstration of Meta’s newest AI-powered smart glasses experienced multiple technical failures, leading to awkward moments and the now-infamous “Wi-Fi blame” excuse that has become a trending topic across tech communities.

What Happened During the Meta AI Smart Glasses Demo?

The Cooking Demo Disaster

The first major failure came during a cooking demonstration of the Live AI feature built into Meta’s smart glasses. The glasses’ live AI misinterpreted prompts, insisted that the base ingredients had already been combined, and suggested steps for a sauce that had not yet been started, until the demonstration was abruptly halted.

The cooking content creator working alongside Zuckerberg asked the Meta AI assistant simple questions such as “What do I do first?”, but the system repeatedly failed to give coherent responses. Instead of following the natural order of the recipe, the assistant kept skipping ahead, creating confusion and exposing the limitations of current AI technology in real-world use.

The Neural Band Breakdown

The second significant failure involved Meta’s Neural Band, another cutting-edge product that was supposed to showcase the company’s advances in wearable computing. This stumble added to the series of technical difficulties that plagued the entire presentation.

During this segment, a ringtone kept playing across the deathly silent hall despite Zuckerberg’s best efforts to control the device. The malfunction created an uncomfortable atmosphere in the auditorium as Zuckerberg visibly struggled to regain control of the demonstration.

Mark Zuckerberg’s Response: Blaming the Wi-Fi

The Immediate Reaction

When faced with these technical failures, both Mark Zuckerberg and his team quickly pointed fingers at the venue’s internet connectivity. “The irony of the whole thing is that you spend years making technology and then the Wi-Fi of the…” Zuckerberg remarked, trying to maintain composure while addressing the audience.

The Wi-Fi excuse became the go-to explanation for the demo failures. Mancuso, the cooking content creator, closed down the demo to raucous laughter and then applause from the audience. “It’s all good,” Zuckerberg said, a sheepish grin on his face. The moment highlighted how vulnerable even the most advanced technology companies are when conducting live demonstrations.

Public Reaction and Media Coverage

The incident quickly gained traction across social media platforms and tech news outlets, with many questioning whether the Wi-Fi explanation was legitimate or simply a convenient scapegoat. The segment was abruptly cut short after the panicked presenter and a visibly flustered Zuckerberg blamed the AI’s failure on “bad wifi,” a flimsy excuse that drew pity cheers from the audience.

The tech community’s response was mixed, with some showing sympathy for the challenges of live demonstrations while others criticized Meta for not having adequate backup systems in place for such a high-stakes presentation.

The Real Technical Explanation: Beyond Wi-Fi

Meta CTO’s Official Response

Hours after the incident, Andrew Bosworth, Meta’s Chief Technology Officer, gave a more detailed explanation during an Instagram AMA session. He attributed the problem to a “race condition” bug, in which the outcome depends on the unpredictable, uncoordinated timing of two or more processes trying to use the same resource at the same time. “We’ve never run into that bug before,” Bosworth noted.

This explanation indicates that the failures were caused not by Wi-Fi connectivity but by software bugs that surfaced in the live demonstration environment. In the same AMA, Bosworth characterized them as demo failures rather than actual product failures.

Understanding Race Condition Bugs

Race condition bugs are among the most challenging technical issues in software development, particularly when dealing with AI systems that process multiple inputs simultaneously. These bugs occur when:

- Multiple processes attempt to access the same system resource

- The timing of these processes becomes unpredictable

- The system cannot properly coordinate between different functions

- The outcome becomes dependent on which process completes first
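To make these conditions concrete, here is a minimal Python sketch of the classic “lost update” race. It is purely illustrative and has no connection to Meta’s actual code, which is not public; the function names are hypothetical. Two threads each read a shared counter and write back the value plus one. In real software the bad timing happens only occasionally, so this sketch uses a `threading.Barrier` to force the unlucky interleaving (both reads finish before either write) and make the bug reproducible on every run.

```python
import threading
from typing import Callable


def _run_two(target: Callable[[], None]) -> None:
    """Start two threads running the same function and wait for both to finish."""
    threads = [threading.Thread(target=target) for _ in range(2)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


def unsafe_increment_pair() -> int:
    """Two threads each do read -> write(read + 1) on a shared counter, unprotected."""
    state = {"count": 0}
    # The barrier forces the worst-case timing: both reads happen before either write.
    both_have_read = threading.Barrier(2)

    def worker() -> None:
        seen = state["count"]        # read the shared value
        both_have_read.wait()        # wait until the other thread has also read it
        state["count"] = seen + 1    # write back using a now-stale value

    _run_two(worker)
    return state["count"]


def safe_increment_pair() -> int:
    """Same two threads, but the read-modify-write is guarded by a lock."""
    state = {"count": 0}
    lock = threading.Lock()

    def worker() -> None:
        with lock:                   # only one thread may read and write at a time
            seen = state["count"]
            state["count"] = seen + 1

    _run_two(worker)
    return state["count"]


print(unsafe_increment_pair())  # 1: one update was lost
print(safe_increment_pair())    # 2: both updates survive
```

Wrapping the read and the write in a single lock is one standard fix: it turns the two steps into one coordinated unit, so the result no longer depends on which thread happens to get there first.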

For Meta’s AI-powered smart glasses, this type of bug could affect various components including the camera, audio processing, AI inference engine, and display systems all trying to work together in real-time.
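A related pattern is “check-then-act” on a shared device. The Python sketch below imagines several components each checking whether a shared camera is free and then claiming it. The component names (`ai_inference`, `recorder`, `video_call`) and both functions are hypothetical, invented for illustration; this is not how Meta’s glasses actually arbitrate hardware. As before, a `threading.Barrier` forces the bad timing so the unsafe version fails every time.

```python
import threading
from typing import Callable, List


def _run_all(target: Callable[[str], None], names: List[str]) -> None:
    """Run one thread per component name and wait for all of them."""
    threads = [threading.Thread(target=target, args=(name,)) for name in names]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


def claim_camera_unsafely(names: List[str]) -> List[str]:
    """Each component checks an 'owner' flag, then claims the camera if it looked free."""
    owner = {"name": None}
    winners: List[str] = []
    results_lock = threading.Lock()              # protects only the results list
    all_checked = threading.Barrier(len(names))  # force every check before any claim

    def component(name: str) -> None:
        looked_free = owner["name"] is None      # check
        all_checked.wait()
        if looked_free:
            owner["name"] = name                 # act on a stale check
            with results_lock:
                winners.append(name)

    _run_all(component, names)
    return sorted(winners)


def claim_camera_safely(names: List[str]) -> List[str]:
    """The check and the claim happen in one atomic step via Lock.acquire(blocking=False)."""
    camera = threading.Lock()
    winners: List[str] = []
    results_lock = threading.Lock()
    all_ready = threading.Barrier(len(names))    # release every component at the same moment

    def component(name: str) -> None:
        all_ready.wait()
        if camera.acquire(blocking=False):       # succeeds for exactly one caller
            with results_lock:
                winners.append(name)
            # The winner keeps the camera for the rest of this sketch; releasing it
            # here would let a later component take it over in a normal hand-off.

    _run_all(component, names)
    return sorted(winners)


parts = ["ai_inference", "recorder", "video_call"]
print(claim_camera_unsafely(parts))     # ['ai_inference', 'recorder', 'video_call']
print(len(claim_camera_safely(parts)))  # 1
```

In the unsafe version every component believes it owns the camera. In the safe version exactly one component wins, although which one wins still depends on timing, which is acceptable because the outcome is always consistent.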

The Technology Behind Meta’s Smart Glasses

Features and Specifications

The Meta Ray-Ban Display glasses that failed during the demonstration represent a significant advancement in wearable technology. The new glasses can capture video in up to 3K resolution and feature a 12-megapixel camera with a 122-degree wide-angle lens. The glasses have an IP67 dust and water resistance rating for use during intense workouts.

Key features of the new smart glasses include:

- 3K video recording capability for high-quality content creation

- 12-megapixel camera with ultra-wide-angle lens

- IP67 water and dust resistance for outdoor activities

- Oakley PRIZM lens technology for enhanced visual experience

- Integrated AI assistant for voice commands and real-time assistance

- Live AI functionality for interactive experiences

Pricing and Market Positioning

The device, expected to cost $800, positions Meta’s smart glasses in the premium wearable technology market. This pricing strategy reflects the company’s confidence in the advanced features and technology integration, despite the demonstration setbacks.

The Broader Impact on Meta’s AI Strategy

Challenges in AI Development

The demo failures highlight the inherent challenges in developing consumer-ready AI technology. While Meta has made significant investments in artificial intelligence research and development, translating laboratory success into reliable consumer products remains a complex challenge.

The incident demonstrates several key issues facing AI companies:

- Real-world performance variability compared to controlled testing environments

- Integration complexity when combining multiple AI systems

- User experience challenges in natural language processing

- Hardware-software coordination in wearable devices

- Live demonstration risks for cutting-edge technology

Meta’s Response Strategy

Following the incident, Meta has taken several steps to address the situation:

- Transparent communication about the technical causes

- Continued development of the underlying technology

- Improved testing protocols for future demonstrations

- Enhanced backup systems for live presentations

Lessons for the Tech Industry

The Reality of Live Demonstrations

The Meta incident serves as a reminder that live tech demonstrations carry inherent risks, regardless of how well-tested the technology might be in controlled environments. Major tech companies including Apple, Google, and Microsoft have all experienced similar challenges during high-profile product launches.

Key lessons for the industry include:

Preparation and Contingency Planning: Companies must develop robust backup plans and alternative demonstration methods to handle unexpected technical failures.

Honest Communication: When things go wrong, transparent communication about the actual causes builds more trust than convenient excuses.

Testing in Real-World Conditions: Laboratory testing cannot fully replicate the complexity and unpredictability of live demonstration environments.

Managing Expectations: Setting realistic expectations about early-stage technology helps audiences understand the developmental nature of cutting-edge products.

The Human Element in Technology

The most revealing moment of the clunky demo was the excuse that both Zuckerberg and Mancuso offered for the smart glasses’ shaky performance. This human response to technical failure illustrates how even the most sophisticated technology companies must navigate the gap between innovation and reliable execution.

What This Means for Consumers

Realistic Expectations for AI Wearables

The demonstration failures provide valuable insights for consumers considering AI-powered wearable devices. While the technology shows tremendous promise, current limitations suggest that:

- Early adoption may involve tolerating occasional glitches and limitations

- AI assistants in wearable form factors are still evolving

- Integration challenges between hardware and software persist

- Real-world performance may vary from marketing demonstrations

The Future of Smart Glasses

Despite the demo setbacks, the underlying technology in Meta’s smart glasses represents significant advancement in several areas:

- Computer vision capabilities for real-world object recognition

- Natural language processing for conversational AI interactions

- Miniaturization of complex computing systems

- Battery efficiency in compact wearable form factors

Industry Competition and Market Dynamics

The Smart Glasses Race

Meta’s challenges in the smart glasses market reflect broader industry dynamics as multiple companies compete to create the first mainstream augmented reality wearable device. Competitors including Apple, Google, Microsoft, and various startups are all working on similar technologies, each facing their own technical and market challenges.

The race to market involves several critical factors:

- Technical reliability and consistent performance

- User experience design and intuitive interfaces

- Battery life and practical usability

- Privacy and security considerations

- Price points that appeal to mainstream consumers

Learning from Setbacks

The Meta demonstration failure, while embarrassing, provides valuable learning opportunities for the entire industry. Public failures often accelerate innovation by highlighting specific areas that need improvement and encouraging more robust testing and development practices.

FAQs About Meta’s Smart Glasses Demo Failure

Q: Was the Wi-Fi really to blame for Meta’s smart glasses demo failure?

Ans: No, Meta’s CTO later revealed the actual cause was a “race condition” bug in the software, not Wi-Fi connectivity issues. The Wi-Fi explanation was an immediate excuse during the live demonstration.

Q: How much will Meta’s new smart glasses cost?

Ans: The Meta Ray-Ban Display glasses are expected to cost around $800, positioning them in the premium wearable technology market.

Q: What features do the new Meta smart glasses have?

Ans: The glasses include 3K video recording, a 12-megapixel wide-angle camera, IP67 water resistance, integrated AI assistant, and live AI functionality for interactive experiences.

Q: Are the demo failures indicative of product quality issues?

Ans: According to Meta’s CTO, these were demonstration-specific failures rather than fundamental product problems. The race condition bug had not been encountered in previous testing.

Q: When will Meta’s smart glasses be available to consumers?

Ans: Meta has not announced specific availability dates, but the company continues development following the Connect 2025 demonstration.

Q: How do these failures compare to other tech company demo problems?

Ans: Live demonstration failures are common across the tech industry, with Apple, Google, Microsoft, and other major companies having experienced similar issues during product launches.

Q: What is a race condition bug?

Ans: A race condition bug occurs when multiple software processes try to access the same resource simultaneously, so the outcome depends on their timing rather than on proper coordination.

Conclusion: Learning from Failure in Tech Innovation

Mark Zuckerberg’s Meta AI smart glasses demo failure and the subsequent Wi-Fi blame excuse offer valuable insights into the challenges facing cutting-edge technology development. While the immediate response of blaming connectivity issues may have seemed like damage control, Meta’s later transparency about the actual technical causes demonstrates a more mature approach to handling setbacks.

The incident highlights the gap between laboratory success and real-world performance that all technology companies must navigate. Rather than diminishing confidence in Meta’s capabilities, these challenges should be viewed as natural parts of the innovation process, particularly when dealing with complex AI systems and wearable technology integration.

For consumers and industry observers, the key takeaway is the importance of realistic expectations when evaluating emerging technologies. The smart glasses market continues to evolve, with each setback providing learning opportunities that ultimately lead to more robust and reliable products.

Ready to stay informed about the latest developments in AI wearable technology? Keep following Meta’s progress with their smart glasses development, and remember that today’s demonstration failures often become tomorrow’s breakthrough successes. The future of augmented reality and AI-powered wearables remains bright, built on the foundation of lessons learned from both successes and failures like this one.